Cycle 8 2021 - Guidelines for Reviewers

Review criteria

Each proposal contains a cover sheet, a Scientific Justification, and a Technical Justification. Reviewers need to read each of these sections. Note in particular that the Technical Justification often contains a detailed justification of the requested sensitivity, angular resolution, and correlator setup that will be useful in evaluating the proposal.

Reviewers should assess the scientific merit of the proposals to the best of their ability using the following criteria:

The overall scientific merit of the proposed investigation and its potential contribution to the advancement of scientific knowledge.

- Does the proposal clearly indicate which important, outstanding questions will be addressed?

- Will the proposed observations have a high scientific impact on this particular field and address the specific science goals of the proposal? ALMA encourages reviewers to give full consideration to well-designed high-risk/high-impact proposals even if there is no guarantee of a positive outcome or definite detection.

- Does the proposal present a clear and appropriate data analysis plan?

The suitability of the observations to achieve the scientific goals.

- Is the choice of target (or targets) clearly described and well justified?

- Are the requested signal-to-noise ratio, angular resolution, spectral setup, and u-v coverage sufficient to achieve the science goals?

In general, the scientific merit should be assessed solely on the content of the proposal, according to the above criteria. Proposals may contain references to published papers (including preprints) as per standard practice in the scientific literature. Consultation of those references should not, however, be required for a general understanding of the proposal.

Technical and scheduling feasibility.

The ALMA Observing Tool (OT) validates most technical aspects of the proposal; e.g., the OT verifies that the angular resolution can be achieved, verifies that the correlator setup is feasible, and provides an accurate estimate of the integration time needed to achieve the requested sensitivity. Reviewers should assume that the OT technical validation of the proposal is correct, and they should not downgrade a proposal merely because of the size of the proposal request. However, the reviewers can and should consider whether the requested signal-to-noise ratio, angular resolution, spectral setup, and other aspects of the observations as requested by the PI are sufficient to achieve the scientific goals of the proposal.

Reviewers may contact the Proposal Handling Team (PHT) if they have questions about the observational setup in a proposal. Reviewers in the distributed peer review process may also note any technical concerns of a proposal in their comments to the JAO in the Reviewer Tool, and panel reviewers may also do so by adding a comment to the JAO in the ARP Meeting Tool during the panel meeting. The JAO will evaluate these technical concerns if the proposal is accepted.

Reviewers should not consider the scheduling feasibility in assigning their rankings. The JAO will assess the scheduling feasibility when building the observing queue.

If a proposal is a resubmission of a previously accepted proposal, please consider only the science case in your ranking and review. The JAO will handle any necessary descoping of Science Goals which have already been observed.

Additional review criteria for Large Programs

For Large Programs, in addition to the review criteria above, reviewers should also consider the following criteria:

- Does the Large Program address a strategic scientific issue and have the potential to lead to a major advance or breakthrough in the field that cannot be achieved by combining regular proposals?

- Are the data products that will be delivered by the proposal team appropriate given the scope of the proposal and will the products be of value to the community?

- Is the publication plan appropriate for the scope of the proposal?

Management plan.

The ALMA Proposal Review Committee (APRC) will evaluate the management plans for Large Programs to assess if the proposal team is prepared to complete the project in a timely fashion, both in terms of personnel and computing resources. The management plans will be evaluated only after the APRC has completed the scientific rankings of the Large Programs. The evaluation of the management plan will not be used to modify the scientific rankings. Any concerns that the APRC has about the management of a Large Program will be communicated to the ALMA Director, who will make the final decision on whether to accept the proposal.

Technical and scheduling feasibility.

The JAO will assess the technical feasibility and scheduling feasibility of the Large Programs and report the results to the APRC.

Conflict criteria

The goal of the review assignments is to provide informed, unbiased assessments of the proposals. In general, a reviewer has a major conflict of interest when their personal or work interests would benefit if the proposal under review is accepted or rejected.

The JAO will automatically identify major conflicts of interest based on the following criteria (see footnote 1):

- The PI, reviewer, or mentor of the submitted proposal is a PI or co-I of the proposal to be reviewed.

- The PI, reviewer, or mentor of the submitted proposal and the PI of the proposal to be reviewed have been collaborators on any proposal submitted in recent cycles or in the current cycle.

- The PI, reviewer, or mentor of the submitted proposal is at the same institution as the PI on the proposal to be reviewed.
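The three automatic criteria above can be read as a simple rule check. The following is only an illustrative sketch, not the JAO's actual implementation: the `Person` and `Proposal` types, the `automatic_conflicts` function, and the representation of past collaborations as a set of name pairs are all hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    # Hypothetical record; a real user profile holds more fields.
    name: str
    institution: str


@dataclass(frozen=True)
class Proposal:
    # Hypothetical record of the proposal to be reviewed.
    pi: Person
    co_is: tuple = ()


def automatic_conflicts(submitting_side, target, past_collaborations):
    """Return the set of automatic criteria that are triggered.

    submitting_side: the PI, reviewer, and/or mentor of the submitted proposal.
    target: the proposal to be reviewed.
    past_collaborations: set of frozenset({name_a, name_b}) pairs (assumed
        format) for people who shared a proposal in recent or current cycles.
    """
    triggered = set()
    investigators = {target.pi.name} | {p.name for p in target.co_is}
    for person in submitting_side:
        # Criterion 1: the person is a PI or co-I of the proposal to be reviewed.
        if person.name in investigators:
            triggered.add("investigator")
        # Criterion 2: the person and the target PI have been collaborators.
        if frozenset({person.name, target.pi.name}) in past_collaborations:
            triggered.add("collaborator")
        # Criterion 3: the person is at the same institution as the target PI.
        if person.institution == target.pi.institution:
            triggered.add("institution")
    return triggered
```

An empty result means only that none of the automatic criteria fired under these assumptions; reviewers must still declare any other conflicts themselves.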

Each reviewer has the responsibility to identify other conflicts of interest that were not automatically detected by the JAO. Reviewers should inform the JAO PHT of any major conflict of interest in their assignments using the Reviewer Tool (for distributed peer review) or the Assessor Tool (for the panel review). In addition to the above criteria, a major conflict of interest occurs when:

- The reviewer is proposing to observe the same object(s) with similar science objectives.

- The reviewer has close personal ties (e.g., family member, partner) to the proposal, that can be clearly recognized even though the proposal is anonymized.

- The reviewer has provided significant advice to the proposal team on the proposal even though they are not listed as an investigator.

A student reviewer participating in the distributed peer review must declare any conflict that applies to either themselves or the mentor. Please work with the mentor to ensure that the conflicts of interest are identified accurately.

Reviewers who identify a major conflict of interest in their review assignments should reject the proposal in question and indicate why they believe a major conflict of interest exists. The PHT will evaluate each reported conflict and, if it is approved, assign a replacement proposal to the reviewer.

Writing reviews to the PIs

Thoughtful reviews from reviewers can help PIs improve their proposed project and write stronger proposals in the future. Reviews must be written in English.

Reviewers in the distributed peer review process shall provide a scientific review and a rank for each proposal. The reviews will be sent anonymously to PIs without any editing by the JAO, along with the rank each reviewer provided. If reviewers participate in Stage 2, then the revised reviews and ranks will be sent to the PIs; if not, the Stage 1 reviews and ranks will be sent.

In the panel review process, Primary Assessors shall prepare consensus reports based on the discussion of the proposal at the panel meeting. Consensus reports will be sent to the PIs.

Below, we provide some guidelines to assist reviewers in writing useful reviews.

Guidelines

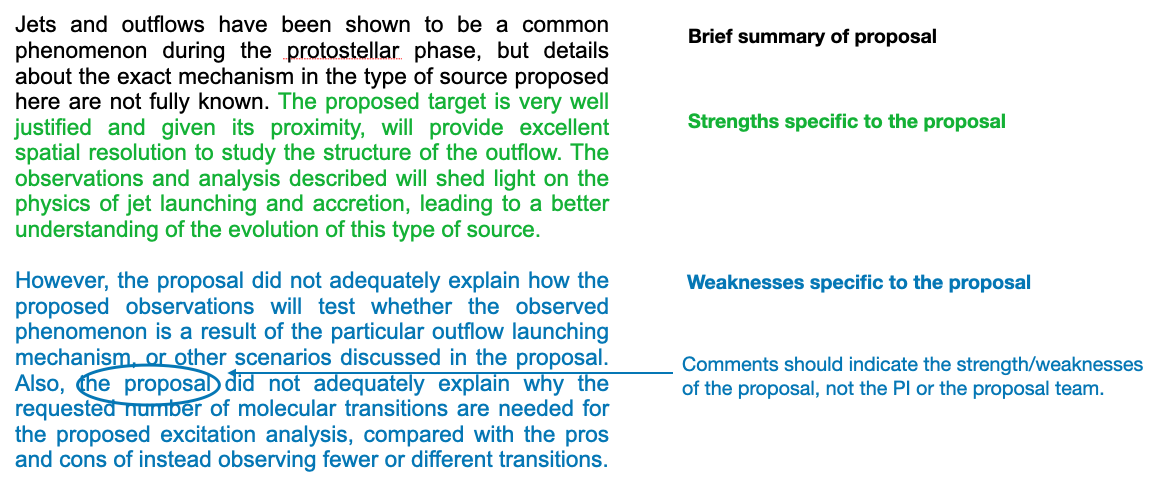

1. Summarize both the strengths and weaknesses of the proposal.

- A summary of both the strengths and weaknesses can help PIs understand what aspects of the project are strong, and which aspects need to be improved in any future proposal.

- Reviews should focus on the major strengths and major weaknesses. Avoid giving the impression that a minor weakness was the cause of a poor ranking. Many proposals do not have obvious weaknesses but are just less compelling than others; in such a case, acknowledge that the considered proposal is good but that there were others that were more compelling.

- Take care to ensure that the strengths and weaknesses do not contradict each other.

2. Be objective.

- Be as specific as possible when commenting on the proposal. Avoid generic statements that could apply to most proposals.

- If necessary, provide references to support your critique.

- All reviews should be impersonal, critiquing the proposal and not the proposal team. For example, do not write "The PI did not adequately describe recent observations of this source." but instead write "The proposal did not adequately describe recent observations of this source."

- Reviewers cannot be sure at the time of writing reviews whether the proposed observations will be scheduled for execution. The reviews should be phrased in such a way that they are sensible and meaningful regardless of the final outcome.

3. Be concise.

- It is not necessary to write a lengthy review. A review of only a few sentences can be informative if it is specific. However, please avoid writing only a single sentence that does not address specific strengths and weaknesses.

4. Be professional and constructive.

- It is never appropriate to write inflammatory or inappropriate comments, even if you think a proposal could be greatly improved.

- Use complete sentences when writing your reviews. We understand that many reviewers are not native English speakers, but please try to use correct grammar, spelling, and punctuation.

5. Be aware of unconscious bias.

- We all have biases and we need to make special efforts to review the proposals objectively. A discussion of unconscious bias is provided in the "Unconscious bias" section of these guidelines.

6. Be anonymous.

- Do not identify yourself in the reviews to the PIs. In case of distributed peer review, these reviews will not be checked and edited by the JAO. They will be sent verbatim to the PIs, and they will also be shared with other reviewers during Stage 2.

- Do not spend time trying to guess which team is behind the proposal you are reviewing. Your review should be based solely on the scientific merit of the proposal. The identity of the team behind it has no relevance for your review. For more information, see the dual-anonymous guidelines for reviewers.

7. Other best practices.

- Do not summarize the proposal: The purpose of the review is to evaluate the scientific merits of the proposal, not to summarize it. While you may provide a concise overview of the proposal, it should not constitute the bulk of the reviews.

- Do not include statements about scheduling feasibility. If there are any scheduling feasibility issues with the proposal, the JAO will address them directly with the PI.

- Do not include explicit references to other proposals that you are reviewing, such as project codes.

- Do not ask questions. A question is usually an indirect way to indicate there is a weakness in the proposal, but the weakness should be stated explicitly. For example, instead of "Why was a sample size of 10 chosen?" write "The proposal did not provide a strong justification for the sample size of 10."

- Do not use sarcasm or any insulting language.

8. Re-read your reviews and scientific rankings.

- Once you have completed your assessments, re-read your reviews and ask how you would react if you received them. If you feel that the reviews would upset you, revise them.

- Check to see if the strengths and weaknesses in the reviews are consistent with the scientific rankings. If not, consider revising the reviews or the rankings.

Example review

Unconscious bias

Unconscious bias in the review process occurs when a reviewer holds a bias (of which they are often unaware) in favor of, or against, a proposal for reasons other than scientific merit. Because these biases are a result of our own culture and experiences, all reviewers are influenced by unconscious bias. Examples include gender, culture, age, prestige, language, and institutional biases.

ALMA has found systematics in the proposal rankings in Cycles 0-6 that may indicate bias exists in the proposal review process (Carpenter 2020; see also Lonsdale et al. 2016). Similar studies have been published by Reid (2014) in an analysis of Hubble Space Telescope (HST) proposals and by Patat (2016) for ESO proposals.

The ALMA proposal rankings have been correlated with the experience level of a Principal Investigator (PI) in submitting ALMA proposals, regional affiliation of the PI (Chile, East Asia, Europe, North America, or other), and gender (based on genders commonly associated with first names). The analysis was conducted for both the Stage 1 proposal rankings, which are based on the preliminary scores from the reviewers, and the Stage 2 proposal rankings, which are based on the final scores from the reviewers after participating in a face-to-face panel discussion. The main findings of the study are as follows:

- PIs who submit an ALMA proposal in multiple cycles have systematically better proposal ranks than PIs who have submitted proposals for the first time.

- PIs from Europe and North America have better proposal rankings than PIs from Chile and East Asia.

- Proposals led by men tend to have better Stage 1 rankings than proposals led by women when averaged over all cycles. This was most noticeably present in Cycle 3 but no discernible differences by gender are present in subsequent cycles. Nonetheless, in each ALMA cycle to date, women have had a lower success rate than men in terms of having their proposals added to the observing queue even after differences in demographics by gender are considered.

- Comparison of the Stage 1 and Stage 2 rankings reveals no significant changes in the distribution of proposal ranks by experience level or gender as a result of the panel discussions. The proposal ranks for East Asian PIs show a small, but significant, improvement from Stage 1 to Stage 2 when averaged over all cycles.

- Any systematics in the proposal rankings were introduced primarily in the Stage 1 process and not from the face-to-face discussions.

While the origins of these systematics are uncertain, unconscious bias in the review process should not be discounted as a contributing factor.

ALMA is committed to awarding telescope time purely on the basis of scientific merit. Therefore, ALMA would like to make reviewers aware of the role that unconscious bias can play in the review process (see footnote 2). Reviewers should also recognize that English is a second language for many, if not most, PIs. ALMA reminds the reviewers to focus their reviews on the scientific merit of the proposals.

In Cycle 7, two changes were introduced to the proposal cover sheet in order to reduce potential biases in the proposal review process. First, the investigators were listed with the initial of the first name and the full surname. Second, the list of investigators on the cover sheet was randomized. The Science Assessors could see the team that was behind the proposal but could not identify who was the PI. When analyzing the Cycle 7 ranks after such changes we saw:

- First-time PIs continued to have the poorest overall ranks, but the ranks of second-time PIs were indistinguishable from those of more experienced PIs.

- Ranks of PIs from Chile improved in comparison to Europe and North America, while the ranks of PIs from East Asia presented no changes.

- There was no significant change in the case of gender.

More cycles are needed before any firm conclusions on the origin of these systematics and possible biases can be made. Nonetheless, with the aim of reducing any biases as much as possible, ALMA has adopted dual-anonymous peer review starting in Cycle 8, where the proposers will not know who reviewed their proposal, and reviewers will not know who is part of the proposer team.

Dual-anonymous

Please refer to the dual-anonymous guidelines for reviewers for guidance on how to approach the proposal review under the new dual-anonymous policy, and for a description of the procedure to follow if you find a problem with anonymization.

Guidelines for Mentors

Reviewers participating in the distributed review process who do not have a PhD are required to have a mentor who will assist with the proposal review. The mentors are specified in the ALMA Observing Tool when preparing the proposal. In general, the role of the mentor is to provide whatever guidance is necessary for the reviewer during the review process. They are expected to interact with the process purely via the reviewer; for instance, mentors do not have access to the Reviewer Tool.

Specific roles of a mentor include:

- Work with the reviewer to declare any conflicts of interest on the assigned proposals. The conflicts of interest criteria apply to both the reviewer and the mentor.

- Provide advice to the reviewer as needed on the scientific assessment of the proposals.

- Provide guidance to the reviewer on providing constructive feedback to the PIs.

Code of conduct and confidentiality

All participants in the review process are expected to behave in an ethical manner.

- Reviewers will judge proposals solely on their scientific merit.

- Reviewers will be mindful of bias in all contexts.

- Reviewers will declare all major conflicts of interest.

- The proposal reviews will be constructive and avoid any inappropriate language.

All proposal materials related to the review process are strictly confidential.

- The assigned proposals may not be distributed or used in any manner not directly related to the review process.

- Any data, intellectual property, and non-public information shown in the proposals may be used only for the purpose of carrying out the requested proposal review.

- The assigned proposals and the reviews may not be discussed with anyone other than the JAO Proposal Handling Team, the review panel, or the assigned mentor, where applicable.

- All electronic and paper copies of the proposal materials must be destroyed as soon as a reviewer completes the proposal review process.

1 Note that the automatic conflict identification is only as correct and complete as the information recorded in the ALMA user profiles of the users involved (PIs, reviewers, mentors). Please take special care to ensure that your user profile is up to date by the proposal deadline.

2 For more information on unconscious bias and how it can affect the review process, reviewers are encouraged to read the Unconscious bias training module from the Canada Research Chairs.
